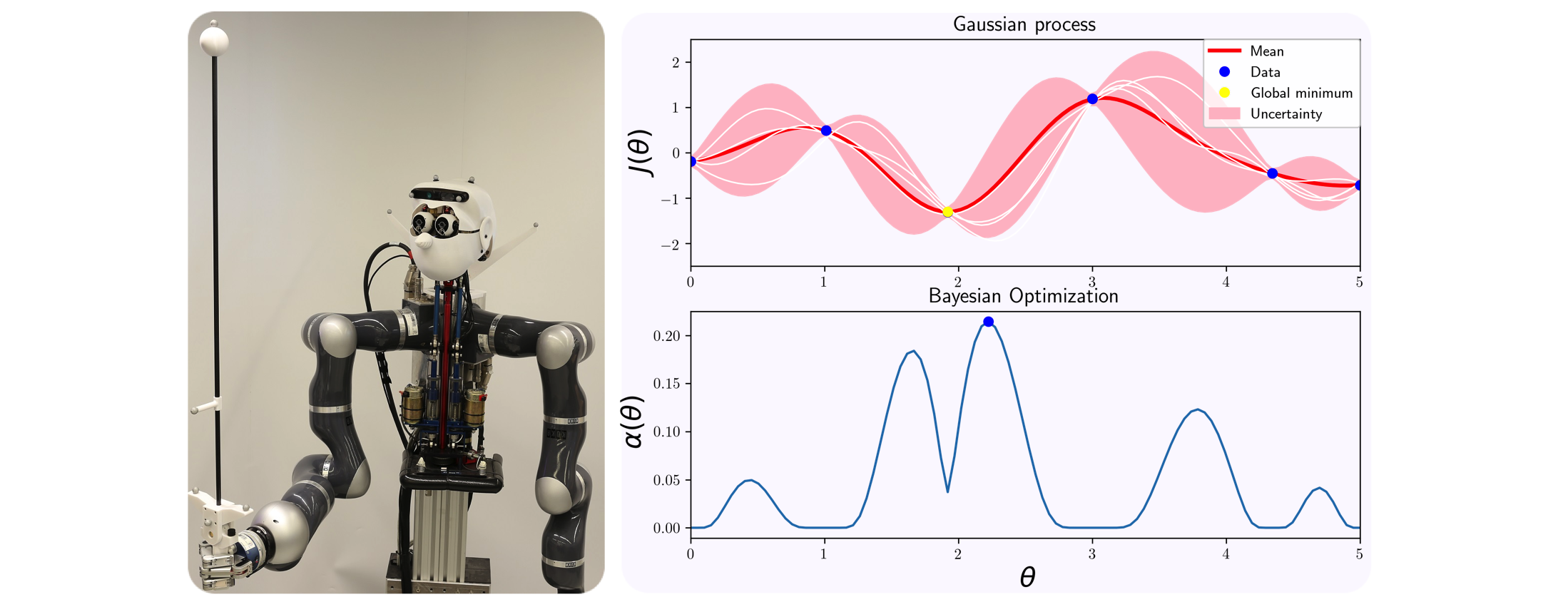

Left: Humanoid robot Apollo learning to balance an inverted pole using Bayesian optimization. Right: One-dimensional synthetic example of an unknown cost $J(\theta)$, modeled as a Gaussian process over the controller parameter $\theta$ and conditioned on observed data points. The Bayesian optimizer suggests evaluating next the controller at which the acquisition function $\alpha(\theta)$ attains its maximum.

Autonomous systems such as humanoid robots are characterized by a multitude of feedback control loops operating at different hierarchical levels and time scales. Designing and tuning these controllers typically requires significant manual modeling and design effort as well as exhaustive experimental testing. To manage this ever-growing complexity and achieve greater autonomy, it is desirable to develop intelligent algorithms that allow autonomous systems to learn from experimental data. In our research, we leverage automatic control theory, machine learning, and optimization to develop algorithms for automatic controller design and tuning.

In [ ], we propose a framework in which an initial controller is automatically improved based on the performance observed in a limited number of experiments. Entropy Search (ES) [ ] serves as the underlying Bayesian optimizer for this auto-tuning method. It models the unknown control objective as a Gaussian process (GP) (see the figure above) and sequentially suggests the controllers that are most informative about the location of the optimum. We validate the developed approaches on the experimental platforms at our institute (see figure).
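To make the loop concrete, here is a minimal sketch of GP-based Bayesian optimization over a single controller parameter in plain Python. It is not Entropy Search: ES picks evaluations by their expected information about the location of the optimum, which requires approximating the distribution over the minimizer. As a simpler, openly swapped-in criterion, the sketch minimizes a lower confidence bound (equivalently, maximizes $\alpha(\theta) = -\mathrm{LCB}(\theta)$). The kernel, its hyperparameters (`length`, `var`, `noise`, `beta`), the candidate grid, and the quadratic `cost` standing in for an experiment are all hypothetical.

```python
import math


def se_kernel(x1, x2, length=0.3, var=1.0):
    """Squared-exponential covariance between two scalar controller parameters."""
    return var * math.exp(-0.5 * ((x1 - x2) / length) ** 2)


def cholesky(a):
    """Lower-triangular Cholesky factor of a small symmetric positive-definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def solve_lower(low, b):
    """Forward substitution: solve L x = b."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(low[i][k] * x[k] for k in range(i))) / low[i][i])
    return x


def solve_upper_t(low, b):
    """Back substitution: solve L^T x = b."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


def gp_posterior(xs, ys, x_star, noise=1e-4):
    """Posterior mean and variance of a zero-mean GP at x_star, given data (xs, ys)."""
    k = [[se_kernel(xi, xj) + (noise if i == j else 0.0) for j, xj in enumerate(xs)]
         for i, xi in enumerate(xs)]
    low = cholesky(k)
    alpha = solve_upper_t(low, solve_lower(low, ys))  # K^{-1} y
    k_star = [se_kernel(x_star, xi) for xi in xs]
    mean = sum(ks * al for ks, al in zip(k_star, alpha))
    v = solve_lower(low, k_star)
    var = se_kernel(x_star, x_star) - sum(vi * vi for vi in v)
    return mean, max(var, 0.0)


def lcb(xs, ys, x, beta):
    """Lower confidence bound; used here in place of the ES acquisition."""
    mean, var = gp_posterior(xs, ys, x)
    return mean - beta * math.sqrt(var)


def bayes_opt(cost, grid, x_init, n_iter=10, beta=2.0):
    """Minimize `cost` over `grid`, spending one evaluation per iteration."""
    xs = list(x_init)
    ys = [cost(x) for x in xs]
    for _ in range(n_iter):
        x_next = min(grid, key=lambda x: lcb(xs, ys, x, beta))
        xs.append(x_next)
        ys.append(cost(x_next))  # one (expensive) experiment
    best = min(range(len(ys)), key=lambda i: ys[i])
    return xs[best], ys[best]


def cost(theta):
    # Hypothetical stand-in for one experiment: a quadratic with its minimum at 0.6.
    return (theta - 0.6) ** 2


grid = [i / 100 for i in range(101)]
best_theta, best_cost = bayes_opt(cost, grid, x_init=[0.0, 1.0])
print(f"best theta = {best_theta:.2f}, cost = {best_cost:.4f}")
```

Each call to `cost` plays the role of one physical experiment, which is the expensive step; the acquisition function is optimized over the cheap GP model instead.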

We have extended this framework in several directions to further improve data efficiency. When auto-tuning complex real systems such as humanoid robots, simulations of the system dynamics are typically available. Simulations provide less accurate information than real experiments, but at a lower cost. Under a limited experimental budget (e.g., total experiment time), our work [ ] extends ES to include the simulator as an additional information source and to automatically trade off information against cost.
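One way to see why a cheap but biased simulator can still be worth querying is to compare how much a single evaluation from each source reduces uncertainty about the real objective, per unit cost. The sketch assumes, hypothetically and not necessarily as in the cited work, that the real objective equals the simulated one plus an independent error GP, and it uses the total prior-variance reduction over a grid of parameters as a simple proxy for the information gain that ES actually measures. All kernel parameters, noise levels, and costs are made up.

```python
import math


def se(x1, x2, length=0.3, var=1.0):
    """Squared-exponential covariance between two scalar controller parameters."""
    return var * math.exp(-0.5 * ((x1 - x2) / length) ** 2)


# Hypothetical model: f_real(x) = f_sim(x) + e(x), with independent zero-mean GPs.
def k_sim(x1, x2):
    return se(x1, x2, var=1.0)


def k_err(x1, x2):
    return se(x1, x2, var=0.2)  # assumed: the sim-to-real error is smaller than the signal


def cov(x1, src1, x2, src2):
    """Prior covariance between f_src1(x1) and f_src2(x2), src in {"sim", "real"}."""
    c = k_sim(x1, x2)
    if src1 == "real" and src2 == "real":
        c += k_err(x1, x2)  # only real evaluations share the error term
    return c


def value_per_cost(x, source, targets, costs, noise=0.01):
    """Prior-variance reduction of f_real summed over `targets`, caused by one noisy
    evaluation of `source` at x, divided by the cost of that evaluation."""
    var_obs = cov(x, source, x, source) + noise
    # Rank-one Gaussian update: var_new(z) = var(z) - cov(z, y)^2 / var(y)
    reduction = sum(cov(x, source, z, "real") ** 2 / var_obs for z in targets)
    return reduction / costs[source]


targets = [i / 20 for i in range(21)]
costs = {"sim": 1.0, "real": 10.0}  # assumed: a real experiment costs 10x a simulation
for source in ("sim", "real"):
    print(source, round(value_per_cost(0.5, source, targets, costs), 3))
```

With these made-up numbers the simulator is the better buy; if a real experiment cost about as much as a simulation, the real system would win, because only real data inform the error term.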

The auto-tuning methods above model the performance objective with standard GP models, which are typically agnostic to the control problem. In [ ], the covariance function (kernel) of the GP model is tailored to the control problem at hand by incorporating its mathematical structure into the kernel design. As a result, the objective is predicted more accurately at controller parameters that have not yet been evaluated, which ultimately speeds up the convergence of the Bayesian optimizer.
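As an illustration of what "mathematical structure" can mean, consider a hypothetical scalar system $x_{k+1} = a x_k + b u_k + w_k$ with white noise of variance $\sigma_w^2$ and static feedback $u_k = -\theta x_k$. For stabilizing gains, the average quadratic cost has the closed form $J(\theta) = \frac{(q + r\theta^2)\,\sigma_w^2}{1 - (a - b\theta)^2}$, which grows without bound as $\theta$ approaches the stability boundary, a shape a stationary kernel such as the squared exponential does not encode. The kernel below, a rank-one term built from this nominal cost plus a squared-exponential term, is only a hypothetical illustration of the idea and not the LQR kernel proposed in [ ]; the values of `a`, `b`, `q`, `r`, `w_var`, and `scale` are made up, and the kernel is meant for stabilizing gains only.

```python
import math


def lqr_average_cost(theta, a=1.2, b=1.0, q=1.0, r=0.1, w_var=1.0):
    """Stationary average cost E[q x^2 + r u^2] of x_{k+1} = a x_k + b u_k + w_k
    under static feedback u_k = -theta * x_k, with w_k white noise of variance w_var.
    Returns inf if the closed loop is unstable."""
    a_cl = a - b * theta
    if abs(a_cl) >= 1.0:
        return math.inf
    x_var = w_var / (1.0 - a_cl ** 2)  # stationary state variance
    return (q + r * theta ** 2) * x_var


def se(t1, t2, length=0.3, var=1.0):
    """Stationary squared-exponential kernel."""
    return var * math.exp(-0.5 * ((t1 - t2) / length) ** 2)


def structured_kernel(t1, t2, scale=0.1):
    """Hypothetical structured kernel on stabilizing gains: a rank-one term from the
    cost a nominal model predicts, plus an SE term for model mismatch. Both terms
    are valid covariances, so their sum is one as well."""
    return scale * lqr_average_cost(t1) * lqr_average_cost(t2) + se(t1, t2)


# Stabilizing gains for a=1.2, b=1 lie in 0.2 < theta < 2.2.
for theta in (0.25, 0.5, 1.0, 1.5, 2.1):
    print(f"theta={theta:4.2f}  J={lqr_average_cost(theta):8.3f}")
```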

Bayesian optimization provides a powerful framework for controller learning, which we have successfully applied in very different settings: humanoid robots [ ], micro robots [ ], and the automotive industry [ ].

Automatic LQR Tuning Based on Gaussian Process Global Optimization

This video demonstrates the Automatic LQR Tuning algorithm for automatic learning of feedback controllers. The auto-tuning method is based on Entropy Search, a Bayesian optimization algorithm for information-efficient global optimization.
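The tuning loop can be pictured as follows: the parameters $\theta$ do not set the feedback gain directly but shape the weights of an LQR design on a nominal model, and each experiment measures the resulting controller's cost on the real plant. The sketch is a minimal scalar version under stated assumptions: a single hypothetical parameter scales the state weight as $q = 10^\theta$, the "true" plant is simulated by a second model, and a crude grid search stands in for Entropy Search. The model values `A_NOM`, `A_TRUE`, `B`, `Q_PERF`, and `R_PERF` are made up.

```python
import math


def dare_scalar(a, b, q, r, iters=10_000, tol=1e-12):
    """Scalar discrete algebraic Riccati equation, solved by iterating the Riccati recursion."""
    p = q
    for _ in range(iters):
        p_next = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
        if abs(p_next - p) < tol:
            return p_next
        p = p_next
    return p


def lqr_gain(a, b, q, r):
    """Infinite-horizon discrete-time LQR gain K for the control law u = -K x."""
    p = dare_scalar(a, b, q, r)
    return a * b * p / (r + b * b * p)


def average_cost(k, a, b, q, r, w_var=1.0):
    """Stationary average of q x^2 + r u^2 under u = -k x and process noise; inf if unstable."""
    a_cl = a - b * k
    if abs(a_cl) >= 1.0:
        return math.inf
    return (q + r * k * k) * w_var / (1.0 - a_cl ** 2)


# Hypothetical setup: the controller is designed on a nominal model but
# evaluated on a "true" plant that differs from it.
A_NOM, A_TRUE, B = 1.1, 1.3, 1.0
Q_PERF, R_PERF = 1.0, 0.1  # fixed weights that define the performance cost J


def experiment(theta):
    """One tuning evaluation: design with state weight q = 10**theta on the nominal
    model, then measure J on the true plant (simulated here, a physical test in practice)."""
    k = lqr_gain(A_NOM, B, 10 ** theta, R_PERF)
    return average_cost(k, A_TRUE, B, Q_PERF, R_PERF)


# Crude grid search as a stand-in for the Bayesian optimizer.
thetas = [i / 10 for i in range(-10, 11)]
best = min(thetas, key=experiment)
print(f"theta=0: J={experiment(0.0):.4f}; best theta={best}: J={experiment(best):.4f}")
```

Because the design model is wrong, designing with the performance weights themselves ($\theta = 0$ here) is not the best choice for the true plant; this mismatch is what the auto-tuner compensates for through experiments.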

ICRA 2017 Spotlight presentation

Presentation of our paper "Virtual vs. Real: Trading Off Simulations and Physical Experiments in Reinforcement Learning with Bayesian Optimization", published at the 2017 IEEE International Conference on Robotics and Automation (ICRA), May 29 - June 3, Singapore.

Virtual vs. Real: Trading Off Simulations and Physical Experiments in Reinforcement Learning with Bayesian Optimization

Video explanation of our paper "Virtual vs. Real: Trading Off Simulations and Physical Experiments in Reinforcement Learning with Bayesian Optimization", published at the 2017 IEEE International Conference on Robotics and Automation (ICRA), May 29 - June 3, Singapore.

On the Design of LQR Kernels for Efficient Controller Learning - CDC presentation

Presentation of our paper "On the Design of LQR Kernels for Efficient Controller Learning" at the 56th IEEE Annual Conference on Decision and Control (CDC), Melbourne, Australia, Dec 2017.