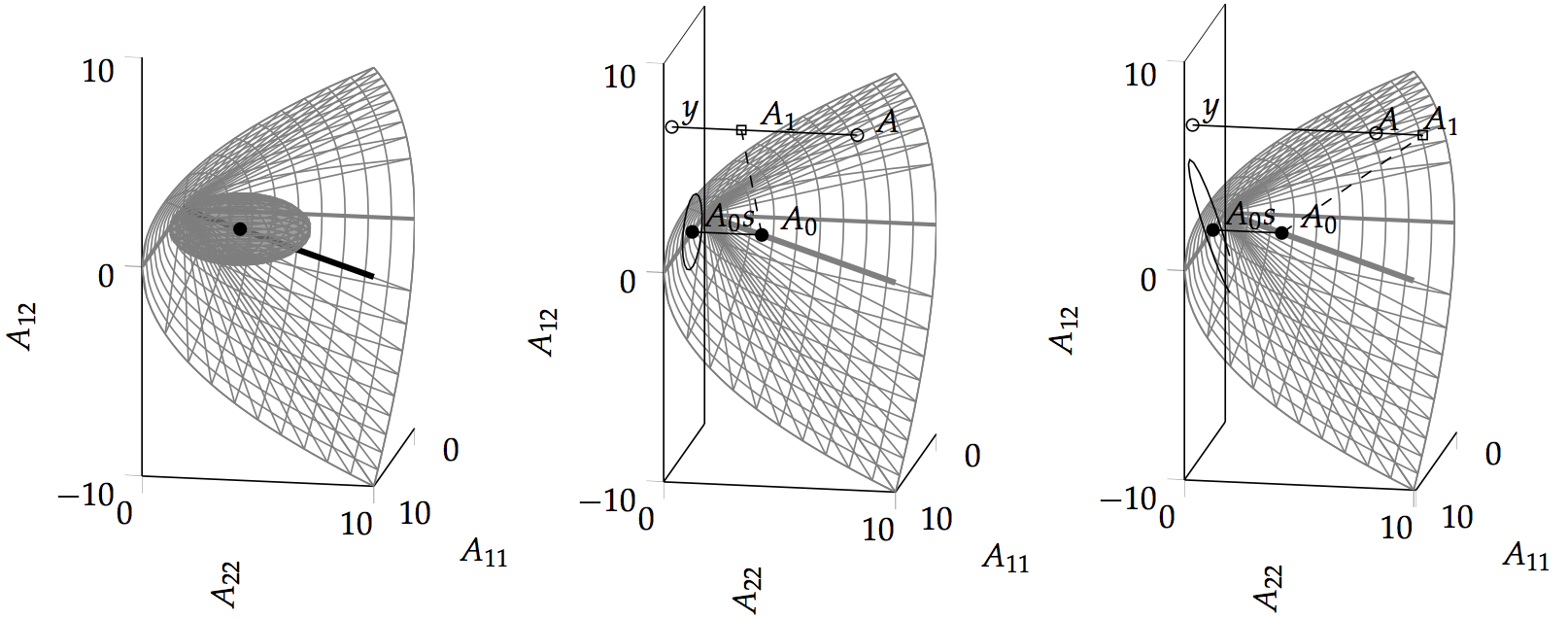

Probabilistic linear algebra methods assign matrix-valued probability measures to unknown matrix quantities; for example to elements of the positive definite cone.

Linear algebra methods form the basis for the majority of numerical computations. Because of this foundational, ``inner-loop'' role, they have to satisfy strict requirements on computational efficiency and numerical robustness.

Our work has added to a growing understanding that many widely used linear solvers can be interpreted as performing probabilistic inference on the elements of a matrix or a vector from observations linear projections of this latent object. In particular, this is true for such foundational algorithms as the method of conjugate gradients and other iterative algorithms in the conjugate directions and projection method classes [ ].

Our ongoing research effort focusses on ways to use these insights in the large-scale linear algebra problems encountered in machine learning. There, the most frequent linear algebra task is the least-squares problem of solving

$$ Kx = b $$

where $K$ is a very large symmetric positive definite matrix (e.g. a kernel Gram matrix, or the Hessian of a deep network loss function). A key challenge in the big data regime is that the matrix --- defined as a function of a large data-set --- can only be evaluated with strong stochastic noise caused by data sub-sampling. Classic iterative solvers, particularly those based on the Lanczos process, like conjugate gradients, are known to be unstable to such stochastic disturbances, which is part of the reason why second-order methods are not popular in deep learning. In recent work we have developed and tested extensions to classic solvers that remain stable [ ] and tractable in this setting by efficiently re-using information across many interations [ ].