Computation is Inference

What does it mean to compute a number? For simple operations like divisions, floating point arithmetic gives a concise and formalized answer: A computer can compute such numbers in a fixed amount of time, to a specified precision. But the situation is more complicated when the quantity to be computed is described in terms of more advanced mathematical operations. There are no general solutions to tasks like finding the extremum of a function, the value of an integral, the solution to a linear system of equations, or to a differential equation. Methods designed to find approximate solutions to such jobs are known as numerical algorithms. Numerical methods are inherentely imperfect. They excel on some tasks and fail on others, and they do not always `notice' the difference. Some numerical methods are expensive and slow, but work on a large class of problems. Others are fickle, specific to a small domain, on which they nevertheless work extremely well.

The computational cost of contemporary machine learning is dominated by such numerical tasks, and the properties of the algorithms used to solve them:

- Optimization methods (sgd, quasi-Newton, Frank-Wolfe,...) train and fit estimators,

- Integration algorithms (MCMC, free energy methods, quadrature,...) compute marginals, conditionals and expectations in probabilistic inference,

- Linear algebra tools (Gauss-Jordan, conjugate gradients, Cholesky decompositions,...) solve elementary tasks like least-squares estimation and do the heavy lifting in the innermost loop of many more high-level numerical methods

- Differential equations are solved to simulate, predict and control future states of the environment

As a young field, machine learning has been able to borrow many extremely well-designed numerical algorithms from other fields. Some of these (e.g. linear algebra subroutines) are so good that people have essentially stopped thinking of them as algorithms with properties and flaws, and blindly trust the black box. This confidence is not always justified, for machine learning and data science poses some new numerical challenges that are not addressed well by classic methods. An example is the strong computational noise caused by data sub-sampling in big-data applications, which can make advanced optimization methods (like quasi-Newton) essentially unusable in applications like deep learning.

The improve this situation, our group aims to develop and broaden the understanding of numerical algorithms for intelligent systems, in the language and the setting of probabilistic machine learning. Our core tenet is that machine learning not just poses new challenges for numerics, it can also offer its own contributions to their solution, because numerical methods are elementary learning machines themselves. A numerical method estimates an unknown, latent quantity (e.g. an integral), given the value of certain tractable, observable quantities (values of the integrand at discrete locations). So they solve an inference task. But numerical methods are not passive statistical estimators; they actively decide, often in a closed feedback loop, which numbers to compute. They thus really are elementary autonomous agents.

In our work, we phrase this active inference process probabilistically, as the actions of an agent equipped with a notion of uncertainty about its task, captured by a probability measure. Exact computations performed on a chip provide information about the analytically intractable task, yielding a posterior probability measure whose location and width should ideally provide a point estimate and meaningful surrounding uncertainty over the true solution. Such algorithms are known as probabilistic numerical methods. Over the past years, we have helped establish this young research field, together with international collaborators.

On the theoretical side, we have contributed in-depth analysis of classic numerical algorithms to show that probabilistic numerical algorithms can be as fast, reliable and flexible as the widely used classics — simply because the classics already are probabilistic numerical methods, they just are not usually presented in this way. They can thus serve as a point of reference from which one can venture out to create new functionality for contemporary challenges. We also showed that in some cases (like optimization for deep learning), the probabilistic viewpoint can help uncover the cause for failures and problems of state-of-the-art methods, and suggest fixes.

For practitioners, our algorithms offer tangible improvements, some of which have helped our work enter into industrial use. Our Bayesian optimization framework Entropy Search has been used for advanced experimental design in areas like robotics and automated machine learning. For deep learning, we have contributed several tools that automate algorithmic hyperparameters in the inner loop, freeing users from tedious and wasteful tasks like step-size adaptation (some of our work can also be directly accessed as code packages on our github page). As the theoretical foundations of probabilistic numerics continue to expand and improve, we now increasingly turn our attention to develop practical algorithms for and in machine learning.

Last but not least, we have also invested in the establishment of a dedicated research community. We created a community website and organized and supported several international workshops and meetings. Partly as a result of this, there is now a nascent by rapidly growing international community studying both the theory and practice of probabilistic numerics.

History of the Group

The Probabilistic Numerics group at the MPI for Intelligent Systems was initially founded and funded, in 2015, as an Emmy Noether Research group of the German Research Union (DFG). In late 2016, it became one of the first independent Max Planck Research Groups of the MPI for Intelligent Systems. In 2017 our work let to the award of an ERC Starting Grant, which in turn led to several offers from German universities to move the group to a more permanent home. In May 2018, Philipp Hennig was appointed as a full professor at the Eberhard Karls University of Tübingen, where the group now lives on in a new form, as the Chair for the Methods of Machine Learning.

Probabilistic Methods for Nonlinear Optimization

Optimization problems arising in intelligent systems are similar to those studied in other fields (such as operations research, control, and computational physics), but they have some prominent features that set them apart, and which are not addressed by classic optimization methods. Numerical optimization is a domain... Read More

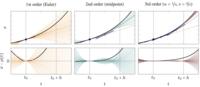

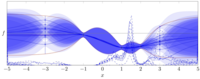

Probabilistic Solvers for Ordinary Differential Equations

Solvers for ordinary differential equations (ODEs) belong to the best-studied algorithms of numerical mathematics. An ODE is an implicit statement about the relationship of a curve $x:\mathbb{R}_{\geq 0}\to\mathbb{R}^N$ to its derivative, in the form $x'(t) = f(x(t),t)$, where $x'$ is the derivative of cu... Read More

Bayesian Optimization

Bayesian Optimization is an increasingly popular approach to industrial and scientific prototyping problems. The basic premise in this setting is that one is looking for a location $x$ in some domain where a fitness function $f(x)$ is (globally) minimized. The additional, sometimes implicit, assumption is t... Read More

Probabilistic Methods for Linear Algebra

Linear algebra methods form the basis for the majority of numerical computations. Because of this foundational, ``inner-loop'' role, they have to satisfy strict requirements on computational efficiency and numerical robustness. Our work has added to a growing understanding that many widely used&... Read More